2023-07-11_Generative Pretraining in Multimodality(Emu-1)

| 2023_Sun_Generative Pretraining in Multimodality.pdf| 2023-07-11 | Quan Sun, Qiying Yu, Yufeng Cui, Fan Zhang, Xiaosong Zhang, Yueze Wang, Hongcheng Gao, Jingjing Liu, Tiejun Huang, Xinlong Wang | URL | arxiv

Input: Interleaved image, text and video

Output: Interleaved image, text

- no videos

核心:regress continuous visual tokens!

Insights

#Idea 视频训练数据:时序对齐数据?使用Temporal Alignment

为什么生成效果不好:

- 图像作为continuous token,不如discrete token

- diffusion中embedding as condition,比较不自然。

关于Understanding效果:没跟MLLM比,是否比不过?

Why Emu

多尺度算子华为GPU是否支持

资源好估计

Model

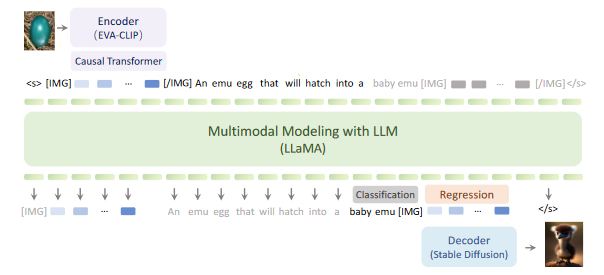

Architecture

Image Encoder, Causal Transformer, LLM, Visual Decoder

Causual Transformer将Encoder输出的image tokens转为fixed size embedding,和[BLIP] Q-Former的唯一区别是self-attention采用causal形式,更有利于Autoregressive Training

Visual Decoder:LLM output embedding as condition.

Training Objective

Stage 1: unified auto-regressive pretraining

- cross-entropy loss for discrete text embedding

- ℓ2 regression loss for continuous visual embeddings

Stage 2: Tune Visual Decoder([Stable Diffusion])

Stage 3: Instrunction Tuning with [LoRA]

Training Data&Details

Pre-training:End-to-End

- Image/Video-text Pairs

- Interleaved Image/Video and Text

Interleaved Video and Text:缩略图+字幕, ordered by timestamp,本质上等于图文交织,没有建模运动信息

Detail

针对[IMG]和[/IMG]token

- [IMG]是否作为Pretraining Objective,让模型自己决定何时输出

- 由于image token数量固定,[/IMG]应该在[IMG]之后的fixed number of tokens 后自动生成?

Eval

Zero-shot

Multimodal Understanding,两种evaluation:

- 使用了Chain Of Thought,先对Image作Caption,然后把Caption和Prompt输入模型

- 没有使用image,都先转化为文字,是否合理?

- follow Flamingo,为了控制输出格式,use two text-only examples from the task as prompts

Text2Image Generation on MSCOCO val, FID,比不过SD Imagen Parti等Model

Few-shot(in-context learning)

VQA VideoQA

Qualitative

real-world knowledge grounding, interleaved multi-image understanding , detailed video understanding, , multimodal assistant, multi-turn dialogue, image blending , and (in-context) text-to-image generation

Abstract

We present Emu, a Transformer-based multimodal foundation model, which can seamlessly generate images and texts in multimodal context. This omnivore model can take in any single-modality or multimodal data input indiscriminately (e.g., interleaved image, text and video) through a one-model-for-all autoregressive training process. First, visual signals are encoded into embeddings, and together with text tokens form an interleaved input sequence. Emu is then end-to-end trained with a unified objective of classifying the next text token or regressing the next visual embedding in the multimodal sequence. This versatile multimodality empowers the exploration of diverse pretraining data sources at scale, such as videos with interleaved frames and text, webpages with interleaved images and text, as well as web-scale image-text pairs and video-text pairs. Emu can serve as a generalist multimodal interface for both image-to-text and text-to-image tasks, and supports in-context image and text generation. Across a broad range of zero-shot/few-shot tasks including image captioning, visual question answering, video question answering and text-to-image generation, Emu demonstrates superb performance compared to state-of-the-art large multimodal models. Extended capabilities such as multimodal assistants via instruction tuning are also demonstrated with impressive performance.